An Iterative Approach to Developing the Collaborating, Learning and Adapting Maturity Tool

This summary of how we developed the CLA Maturity Tool was originally submitted as a case to the 2016 CLA Case Competition. It has been repurposed here as a blog post and includes some updates reflecting more recent developments.

The CLA Maturity Tool was co-created by USAID’s Bureau for Policy, Planning and Learning (PPL) and LEARN, PPL’s contractor. Team members from both organizations shared ownership of the design process and responsibility for creating the tool.

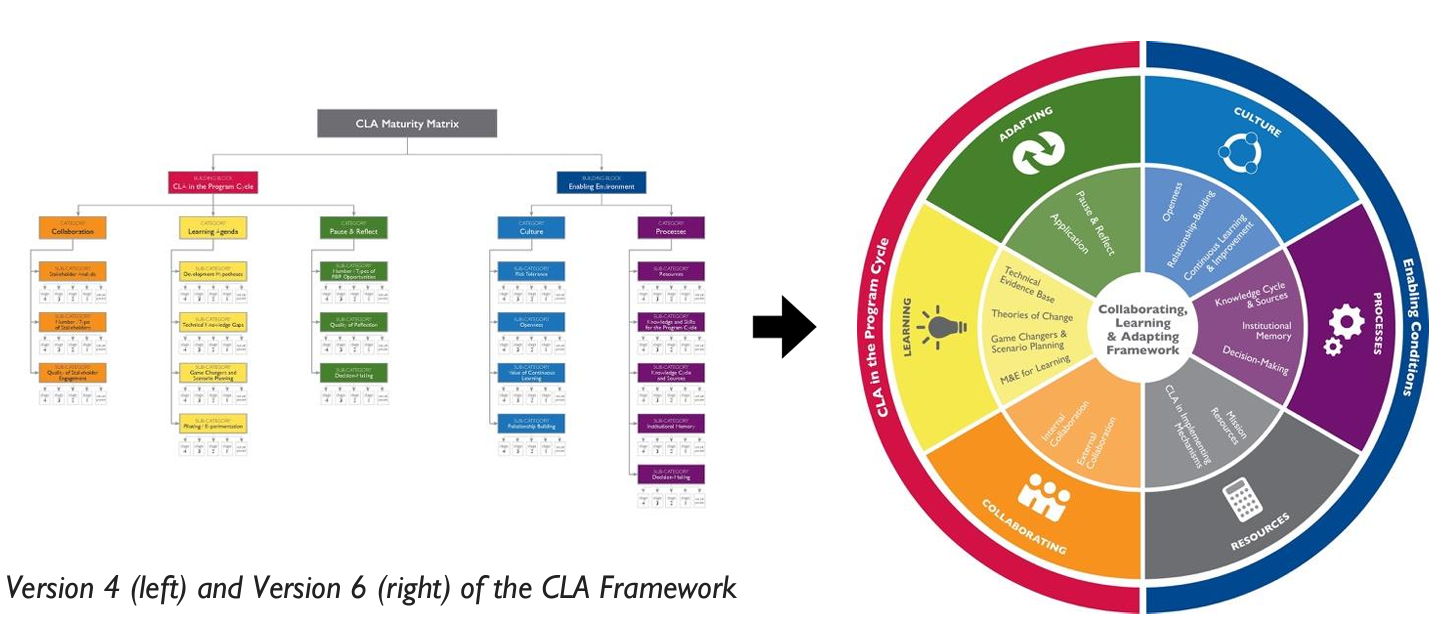

We piloted the tool with a range of stakeholders and used their feedback to iteratively improve both the tool and our approach. Feedback sessions were held within PPL as well as with other bureaus, missions, and implementing partners. We first introduced version 4 (our minimum viable product) in West Africa. Based on the feedback and experience there, we created version 5, which was used in Uganda and the Regional Development Mission in Asia. These experiences confirmed that we had a viable product and generated important feedback that fed into version 6, the version currently in use. We have used version 6 in five additional missions (DRC, Cambodia, Southern Africa, India, and Ethiopia) and are tracking the feedback from these experiences to inform version 7, which will be released alongside the ADS revisions in fall 2016.

The creation of the tool was made possible by enabling conditions within PPL and LEARN. Those working on the tool had a collaborative partnership in which an open flow of feedback was the norm, and our leadership gave us space to experiment. From humble origins as a boring matrix in a Google Sheet, the tool eventually became a deck of cards that let participants interact with the content in more dynamic ways.

Knowledge management was also critical throughout the process. We constantly synthesized and reflected on stakeholder feedback to improve the content of the cards and the facilitation experience, and we kept a change log documenting significant adaptations to the tool and what led to them. The core team creating the tool was small and decision-making roles within it were clear, which helped us avoid delays in adapting the tool as feedback flowed in. We were also given resources to have the cards graphically designed, making the experience richer and more interesting for participants, and team members were given the time needed to work on and complete multiple iterations of the tool.

How was adaptive management built into the process?

One of the unique characteristics of this initiative was that we did not know what the tool would ultimately be or look like. We did not start out with a clear blueprint or a clear sense of how long the process would take. Because of the flexibility leadership gave us, we were able to update plans as we went and did not have specific deadlines in mind. From there, the tool took on a life of its own. Deadlines instead emerged from upcoming technical assistance trips to missions, ahead of which we wanted an updated version of the tool reflecting the feedback received up to that point. For those working on the tool, this lack of clarity was not a problem. Rather, it gave us the freedom to take the tool where it needed to go based on stakeholder input.

Another example of this adaptive approach is how the CLA framework emerged from the CLA Maturity Tool. When we started creating the tool, we knew we needed an organizing framework, but we did not set out to create one as an explicit aim. However, a clear framework emerged in the course of creating the tool. The version 4 and 5 frameworks (see below) were simply organizational charts our graphic designer used to understand the content and provide updated designs, and we defaulted to using this visual to explain the framework when piloting the tool in the field. We quickly heard from participants that this representation gave the impression that aspects of CLA were siloed rather than integrated. We then began thinking about how to represent the framework in a way that conveyed a more holistic view of CLA and the interconnected nature of its components, which resulted in today’s version 6 framework graphic. We now use this framework to organize CLA trainings, to guide updates to how Learning Lab is organized, and to think through the tools and resources we need to provide to support greater CLA integration.

A key challenge has been moving teams from the excellent conversation generated by the self-assessment process to action planning (and to actually executing those action plans). In many cases, we first had difficulty getting mission staff to attend the self-assessment sessions because of limited time and uncertainty about the process. Then, once people came, they found the conversation incredibly engaging, useful, and relevant (and when asked, almost all said they would have devoted more time to the process after having experienced it). By that point, however, it could be too late to leverage the self-assessment discussion for action planning: the team visiting the mission might not have any additional time in its schedule to facilitate action planning, or mission staff might not have time while the team was still in-country. Even in the few cases where missions have allocated sufficient time for both the self-assessment and action planning portions of the process, staff struggle to find the time and mental space needed to execute those plans. To a great extent, this is to be expected given how challenging organizational change can be.

What change has resulted from the use of the Maturity Tool?

LEARN has established monitoring protocols to determine whether CLA Maturity Tool sessions at missions lead to tangible results. While executing action plans remains a concern, we have identified several positive outcomes based on feedback from missions and other testers:

- Through after-action reviews with missions and our follow-up protocols, we have heard from mission staff that the tool itself is engaging, interactive, and useful. Participants have indicated that their understanding of and interest in CLA increased as a result of working through the self-assessment. Specifically, staff have highlighted how the tool brings to light many enabling conditions (particularly within the culture component) that they had not previously considered when thinking about Program Cycle processes. They have also noted that the tool helped clarify what CLA is and is not, which effectively socialized CLA within the mission.

- One mission achieved a tangible outcome from its self-assessment and action planning session: the team decided to integrate CLA into its annual program statement as part of the evaluation criteria (within the technical approach). This could have a ripple effect, leading to greater integration of CLA among implementing partners and changes in how programs are implemented. Another mission told us, “the CLA TDY was one of the best we’ve had because it led to immediate changes in how we operate.” Further follow-up is needed to learn what specifically changed at that mission.

- Anecdotally, USAID staff in Washington have reported an improved understanding of CLA since the release of the framework and maturity tool. We have received requests from various USAID operating units to talk to them about the framework and maturity tool. The framework and tool have also made it easier to find points of collaboration with other operating units, including a new collaboration under development between PPL, the DRG Center, LocalWorks, the Lab, and Forestry and Biodiversity on measuring the effect of collaborating, learning, and adapting approaches on development.

- Implementing partners are also seeking more guidance and information on CLA from PPL and LEARN as a result of our engaging implementing partner focus groups in tool testing. Some implementing partners have asked about using the tool with their AORs/CORs as a way to discuss how CLA can be better integrated into their mechanisms. In addition, other organizations are considering applying the CLA Maturity Tool or creating a similar tool specific to their organizational context. (Update: Since 2016, an Implementing Partner version of the CLA Maturity Tool has been released!) Other contractors who work with missions on strategic planning and CLA integration are also using the framework and maturity tool to inform their technical support to missions.

- CLA’s profile within the international development field has also risen, and CLA is being referenced by thought leaders in the organizational learning and adaptive management fields. A number of factors could explain this, but one is likely the creation of a CLA framework and maturity tool that clearly define what CLA is and what CLA practices look like.

Stay tuned for an upcoming blog post with more reflections on how the CLA Maturity Tool has been used.